Adversarial interaction

How users can win with online platforms

My research focuses on something I call adversarial interaction.

Adversarial interaction occurs when people use online platforms strategically and in unexpected ways. But why would a user want to interact adversarially?

The most obvious example of adversarial interaction is adblocking. A website might instruct your browser to run JavaScript code that tracks you across the web. That tracking data then lets advertisers bid for the right to have your browser execute code that ultimately displays their advertisement. An adblocker is a piece of software that looks at these instructions from the platform and says, “No thanks!”
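To make the “No thanks!” concrete, here is a minimal Python sketch of the simplest kind of blocking: before the browser fetches a resource the page asks for, the blocker checks the request's host and drops the request if it matches a blocklist. The domain names, `BLOCKED_DOMAINS`, `should_block`, and `filter_requests` are hypothetical; real adblockers run inside the browser and use much richer, community-maintained filter lists.

```python
from urllib.parse import urlparse

# Hypothetical blocklist; real adblockers load large community-maintained filter lists.
BLOCKED_DOMAINS = frozenset({"ads.example.com", "tracker.example.net"})


def should_block(url: str, blocked: frozenset = BLOCKED_DOMAINS) -> bool:
    """Return True if the request's host is a blocked domain or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    # Check every suffix of the host: cdn.tracker.example.net -> tracker.example.net -> ...
    parts = host.split(".")
    return any(".".join(parts[i:]) in blocked for i in range(len(parts)))


def filter_requests(urls: list) -> list:
    """Keep only the requests the blocker lets through."""
    return [u for u in urls if not should_block(u)]


requested = [
    "https://news.example.org/article.html",
    "https://ads.example.com/bid.js",
    "https://cdn.tracker.example.net/pixel.gif",
]
print(filter_requests(requested))  # ['https://news.example.org/article.html']
```

Matching on whole domain suffixes rather than substrings means a host like `notads.example.com` is not blocked by accident.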

More broadly, users and platforms are often in conflict: what a user wants may not be good for business. A product’s interface is a kind of choice architecture, a way of setting things up that makes some choices easy to make and others hard. Entering someone else’s choice architecture has consequences for the user’s autonomy. Will they be able to make the choices they want when interacting with the system, or will the designers have engineered friction against certain outcomes?

Our core insight is that the interface between most online software products and their users is contestable. Normally, when interacting with an online service, a user downloads code and data from the platform and executes the code on the data exactly as the platform expects. However, this code, and with it the product design and the choices it favors or disfavors, is often just a suggestion. Users can, in principle, execute the code in unexpected ways, co-authoring the product design so that it more often produces the outcomes they prefer.

We look at user-platform conflicts using tools from the fields of human-computer interaction and security, at incentives in online systems using tools from game theory and mechanism design, and at ways that users can use software to modify their interactions so that these conflicts favor them rather than the platform.

Besides adblocking, another example is a user who wants to spend less time on Facebook. Facebook wants you to spend more time on Facebook; whether you do is core to its success as a business. As a result, Facebook does not offer you a neutral environment in which to decide how much time to spend on its site. Instead, Facebook is designed to be addictive. The notifications it sends, the ratio of pictures and videos to text content, and the speed with which it loads an endless stream of content are all the result of an effort to get users to spend more time on Facebook. But rendering the site according to Facebook’s chosen design is a suggestion, not a requirement.

You could imagine a tool where users specify how much they’d like to use Facebook. Once this usage threshold is reached, the tool might degrade Facebook’s addictive design patterns to make the experience more frustrating, for example by washing out colors, blurring text, and making the site load more slowly.
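The core logic of such a tool could be as small as a function that maps time spent to a degradation level. The Python sketch below is hypothetical throughout: it assumes degradation starts at the user's threshold and ramps up linearly over an assumed 30 minutes, and it expresses the washed-out colors and blur as a CSS `filter` value plus an artificial loading delay in milliseconds, which a browser extension could then apply to the page. The names `Degradation`, `degradation_for`, and `ramp_minutes` and the maximum values are illustrative choices, not a description of an existing tool.

```python
from dataclasses import dataclass


@dataclass
class Degradation:
    """How strongly to degrade the page; all values are illustrative assumptions."""
    saturation: float  # 1.0 = normal colors, 0.0 = fully washed out (grayscale)
    blur_px: float     # blur radius applied to page content, in CSS pixels
    delay_ms: int      # artificial delay added before content loads

    def css_filter(self) -> str:
        # A CSS `filter` value that an extension could inject into the page.
        return f"saturate({self.saturation:.2f}) blur({self.blur_px:.1f}px)"


def degradation_for(minutes_used: float, threshold_minutes: float,
                    ramp_minutes: float = 30.0) -> Degradation:
    """No degradation below the user's threshold, then a linear ramp.

    Degradation reaches its maximum `ramp_minutes` after the threshold
    is crossed; `ramp_minutes` must be positive.
    """
    overuse = max(0.0, minutes_used - threshold_minutes)
    level = min(1.0, overuse / ramp_minutes)  # 0.0 (none) to 1.0 (maximum)
    return Degradation(
        saturation=1.0 - level,
        blur_px=2.0 * level,
        delay_ms=int(3000 * level),
    )


for minutes in (20, 45, 60, 90):
    d = degradation_for(minutes, threshold_minutes=45)
    print(minutes, d.css_filter(), d.delay_ms)
```

A gradual ramp rather than a hard cutoff keeps the site usable just past the threshold while making continued use steadily less rewarding.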

Overall, we want to know what can be achieved by viewing the interface as a contested space, by what means, and at what cost.

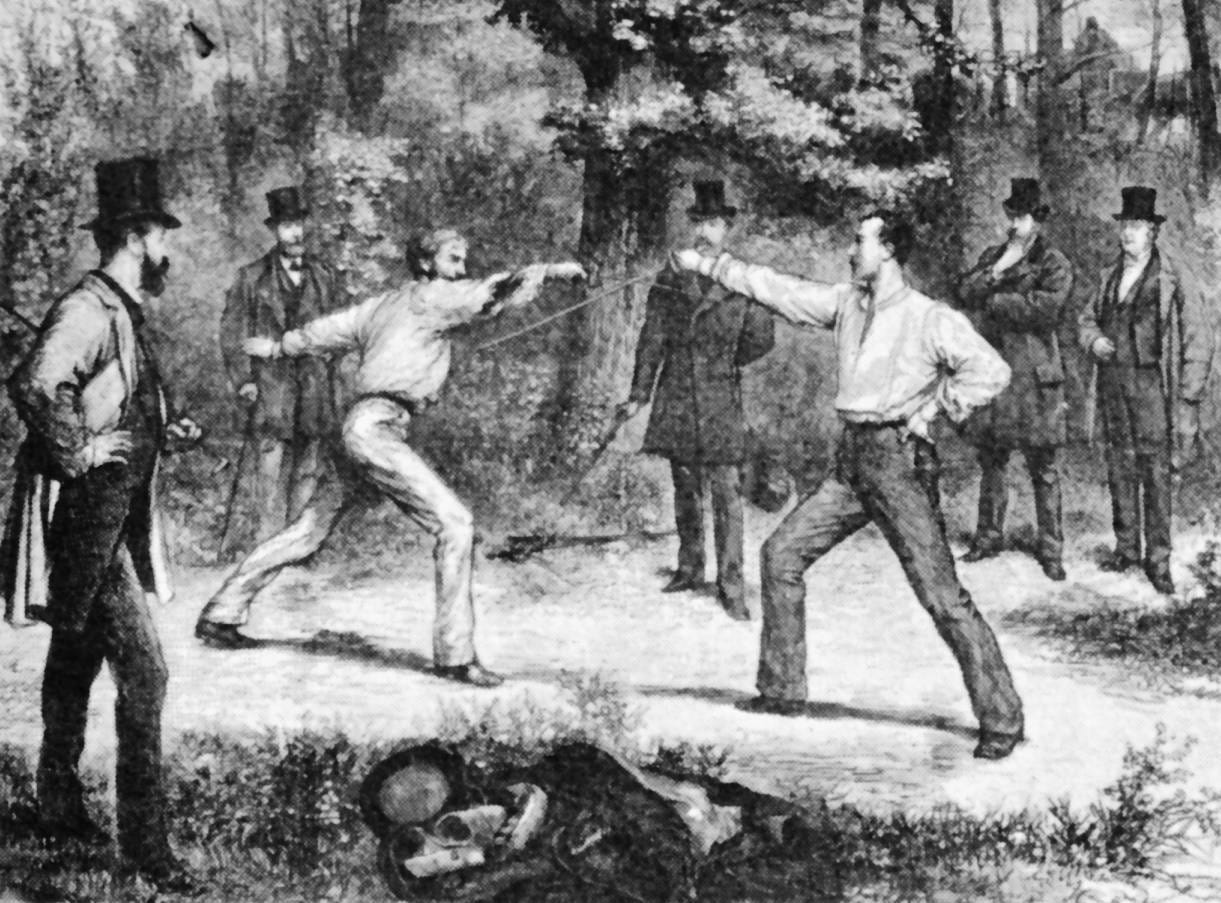

Image: The Code Of Honor—A Duel In The Bois De Boulogne, Near Paris, wood-engraving after Godefroy Durand, Harper's Weekly (January 1875). Credit: Wikipedia